OpenAI's new PhD-level models

Welcome, AI Enthusiasts.

OpenAI’s new o1 series of models is billed as having PhD-level reasoning ability. The models have shown significant performance improvements over GPT-4o on several benchmarks.

You can now create your own synthetic voices instead of cloning someone else’s. But is that really needed? Let’s get into it.

In this week’s AI Catalyst insights:

OpenAI’s new PhD-level models

A voice bot that can detect emotions

Adobe’s new Firefly video model

More AI news

OpenAI’s new PhD-level models

OpenAI has unveiled its PhD-level reasoning models, the o1 series. The models were developed under the code name "Strawberry".

The Specifics:

The o1 models come in two variants:

o1-mini: Small, fast and 80% cheaper than o1-preview.

o1-preview: Larger and designed for advanced reasoning and complex problem-solving.

What sets this series apart is that it reasons more like a human, thinking a problem through before answering. Thinking takes time, so yes, these models are slower than GPT-4o.

When the models think for longer, they use more computational power, so the cost goes up.

On the bright side, although these models are slow, they have posted strong results on Olympiad-level math and coding benchmarks.

For instance, OpenAI reports that the new model scored 83% on a qualifying exam for the International Mathematics Olympiad, whereas GPT-4o solved only 13% of the problems. This shows their capacity for deep thinking and problem-solving.🔥

This is thanks to a reinforcement learning process, which trained the o1 models to work through complex tasks on their own using rewards and penalties.
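To make "rewards and penalties" concrete, here is a toy sketch in Python. It is not OpenAI's training method, which has not been published in detail; it is only a minimal illustration of the general idea, and the two strategy names and their success rates are made up for the example.

```python
import random

# Hypothetical success rates for two made-up answering strategies.
SUCCESS_RATE = {"guess_quickly": 0.2, "reason_step_by_step": 0.9}


def is_correct(strategy, rng):
    """Simulate whether an answer produced with this strategy is correct."""
    return rng.random() < SUCCESS_RATE[strategy]


def train(strategies, episodes=1000, lr=0.05, epsilon=0.1, seed=0):
    """Learn one score per strategy from rewards (+1) and penalties (-1)."""
    rng = random.Random(seed)
    names = list(strategies)
    scores = {name: 0.0 for name in names}
    for _ in range(episodes):
        # Mostly pick the best-scoring strategy, sometimes explore at random.
        if rng.random() < epsilon:
            choice = rng.choice(names)
        else:
            choice = max(scores, key=scores.get)
        # Correct answers earn a reward, wrong answers a penalty.
        reward = 1.0 if is_correct(choice, rng) else -1.0
        # Nudge the chosen strategy's score toward the reward it just got.
        scores[choice] += lr * (reward - scores[choice])
    return scores


scores = train(SUCCESS_RATE)
best = max(scores, key=scores.get)
print(best, scores)
```

Over many attempts, the strategy that is rewarded more often ends up with the higher score and gets chosen more often. Real systems apply the same feedback loop at a vastly larger scale.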

In ChatGPT, these models are currently available to Plus and Team users.

Key Point:

OpenAI's o1 series models are designed not to be fast but to spend more time thinking through problems before responding, much like a human would. These models can tackle more difficult problems and provide higher-quality outputs, at the cost of longer processing time than previous models like GPT-4o.

A voice bot that can detect emotions

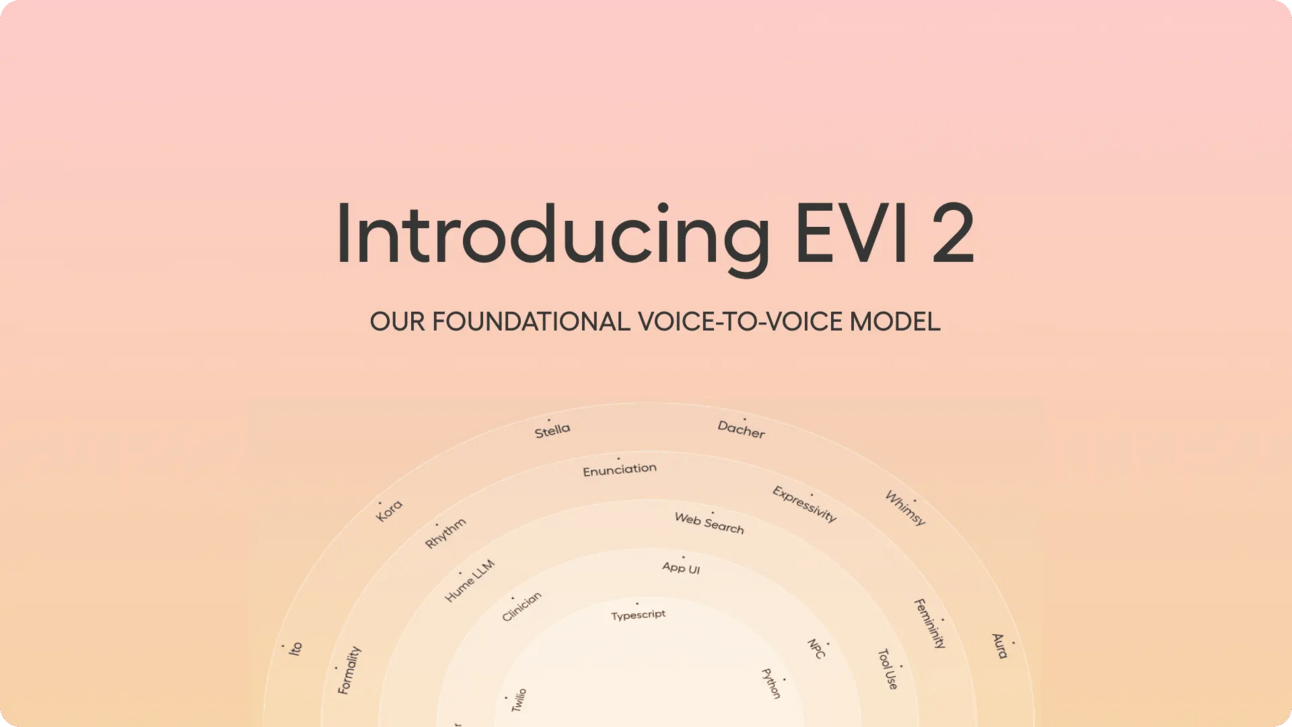

Hume AI has released Empathic Voice Interface 2 (EVI 2), a voice-to-voice foundation model that integrates voice and language processing.

The Specifics:

You can have a human-like conversation with EVI 2, which offers natural language processing and impressive multilingual capabilities.

EVI 2 is built to understand the emotions in your voice and respond accordingly. It also knows when to speak, so it does not cut you off. You, on the other hand, can interrupt EVI 2 at any time, for example to ask it to sing or rap a song for you. Well, we have seen this before with ChatGPT's voice mode, haven't we?

But EVI 2 is not just a voice-enabled character. It provides a voice interface that lets users create their own voices from 7 built-in base voices by adjusting attributes such as gender, huskiness, nasality and pitch. So cool, right?

Users can now create synthetic voices for any use case where a voice character is required, such as teleconferencing or AI voice-overs.

Hume AI has deliberately chosen not to offer voice cloning. Cloning a specific person's voice is disabled by default and is possible only by modifying the underlying code, which is a safety measure on Hume AI's part. Commendable!
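As a rough mental model of "a base voice plus adjustable attributes", the Python sketch below may help. It is purely illustrative and is not Hume AI's API: the class name, the placeholder base-voice names and the -1.0 to 1.0 slider range are all assumptions made for this example.

```python
from dataclasses import dataclass, replace

# Seven placeholder names standing in for the 7 built-in base voices (hypothetical).
BASE_VOICES = tuple(f"base_voice_{i}" for i in range(1, 8))

# The adjustable attributes mentioned above.
ATTRIBUTES = ("gender", "huskiness", "nasality", "pitch")


@dataclass(frozen=True)
class VoiceSettings:
    """A custom voice: one base voice plus attribute sliders (assumed range -1.0 to 1.0)."""
    base_voice: str
    gender: float = 0.0
    huskiness: float = 0.0
    nasality: float = 0.0
    pitch: float = 0.0

    def __post_init__(self):
        if self.base_voice not in BASE_VOICES:
            raise ValueError(f"unknown base voice: {self.base_voice}")
        for name in ATTRIBUTES:
            value = getattr(self, name)
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"{name} must be between -1.0 and 1.0, got {value}")


# Start from an unmodified base voice, then derive a lower, huskier variant.
narrator = VoiceSettings(base_voice="base_voice_3")
custom = replace(narrator, pitch=-0.4, huskiness=0.6)
print(custom)
```

The point of the design is that every custom voice is derived from a fixed set of base voices, so nothing in the workflow takes a recording of a real person as input.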

Key Point:

EVI 2 is not just another voice character; it is a whole new voice creation interface that lets users generate synthetic voices tailored to their requirements. Offering synthetic voice creation without cloning, especially when demand for synthetic voices is on the rise, is superb!

Adobe’s new Firefly video model

Adobe has announced a groundbreaking advancement in video editing technology by introducing the Firefly Video Model.

The Specifics:

You can create B-roll footage using text prompts, camera controls, and reference images. This feature seamlessly fills gaps in timelines and allows for quick exploration of visual effects ideas.

Image to Video: Transform pictures and illustrations into dynamic live-action clips.

Coming soon to Premiere Pro (beta): editors will be able to extend clips, smooth out transitions and adjust shot durations for perfect timing.

This model is designed to be commercially safe for creators. Training data is sourced only from public-domain or licensed content, never from users' content.

The Firefly Video Model capabilities will be available in beta later this year, integrated across Adobe Creative Cloud, Digital Marketing, and Express workflows.

Key Point:

Adobe's Firefly Video Model represents a significant leap forward in video editing technology, offering creators powerful new tools to realise their creative visions more efficiently and push the boundaries of what's possible in video production.

More AI News

The White House met with leaders from major AI companies to discuss strategies for maintaining U.S. leadership in AI infrastructure. During this meeting, the administration announced the formation of a new AI task force focused on infrastructure and energy, aiming to address the challenges posed by AI's growing energy demands and to promote collaboration between the public and private sectors.

Google DeepMind's team has taught robots a set of new tasks to improve their skills: tying shoelaces, inserting gears, replacing parts on another robot, hanging up shirts and cleaning up a kitchen. Although the robots are far from perfect, this is a major step forward for robotics.

Waymo and Uber are set to introduce fully autonomous ride-hailing services in Austin and Atlanta, allowing Uber users to hail Waymo's all-electric Jaguar I-Pace cars starting in early 2025. This partnership marks a significant step in the deployment of self-driving technology.

FEEDBACK

We welcome your feedback and insights. Please reply to this email with any comments or suggestions you'd like to share.

Appreciate your time.

Looking forward to next time!

Naveen Krishnan

The AI Catalyst Inc.
